Step By Step Instructions on Installing Oracle 10g Clusterware Software (10.2.0.1) 32-bit on Red Hat AS 3 x86 (RHEL3) / CentOS 3 x86

By Bhavin Hingu

This document explains the step-by-step process of installing Oracle 10g R2 (10.2.0.1) Clusterware software using OUI.

Installing Oracle 10g (10.2.0.1) Clusterware Software:

Task List:

- Shut down any running Oracle processes
- Determine the Oracle Inventory location
- Set up the oracle user environment
- Run OUI (Oracle Universal Installer) to install 10g RAC Clusterware
- Verify the virtual IP network configuration
- Verify the Oracle Clusterware background processes

Shutting Down Any Running Oracle Processes:

If you are installing Oracle Clusterware on a node that already has a single-instance Oracle Database 10g installation, then stop the existing ASM instances. After Oracle Clusterware is installed, start up the ASM instances again. When you restart the single-instance Oracle database and then the ASM instances, the ASM instances use the Cluster Synchronization Services (CSSD) daemon instead of the daemon for the single-instance Oracle database.

You can upgrade some or all nodes of an existing Cluster Ready Services installation. For example, if you have a six-node cluster, then you can upgrade two nodes in each of three upgrade sessions. Base the number of nodes that you upgrade in each session on the load that the remaining nodes can handle. This is called a "rolling upgrade."

If a Global Services Daemon (GSD) from Oracle9i Release 9.2 or earlier is running, then stop it before installing Oracle Database 10g Oracle Clusterware by running the following command, where $ORACLE_HOME is the Oracle9i home that runs the GSD:

$ORACLE_HOME/bin/gsdctl stop
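If you script this step, a guard against a missing 9i home avoids a confusing "command not found". The helper name stop_9i_gsd is hypothetical; it assumes ORACLE_HOME points at the Oracle9i home that runs the GSD and only calls gsdctl if that binary exists there.

```shell
# Hypothetical helper: stop an Oracle9i GSD before installing 10g Clusterware.
# Assumes ORACLE_HOME is the 9.2 home running the GSD.
stop_9i_gsd() {
    local gsdctl
    if [ -z "$ORACLE_HOME" ]; then
        echo "ORACLE_HOME is not set; point it at the Oracle9i home first" >&2
        return 1
    fi
    gsdctl="$ORACLE_HOME/bin/gsdctl"
    if [ -x "$gsdctl" ]; then
        # Same command as in the text above.
        "$gsdctl" stop
    else
        # No 9i GSD binary in this home, so there is nothing to stop.
        echo "no gsdctl under $ORACLE_HOME/bin; nothing to stop" >&2
    fi
}
```

Run it as the owner of the 9i home, for example "ORACLE_HOME=/path/to/9i/home stop_9i_gsd" (the path is a placeholder).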

Caution:

If you have an existing Oracle9i Release 2 (9.2) Oracle Cluster Manager (Oracle CM) installation, then do not shut down the Oracle CM service. Doing so prevents the Oracle Clusterware 10g Release 2 (10.2) software from detecting the Oracle9i Release 2 node list, and causes the Oracle Clusterware installation to fail.

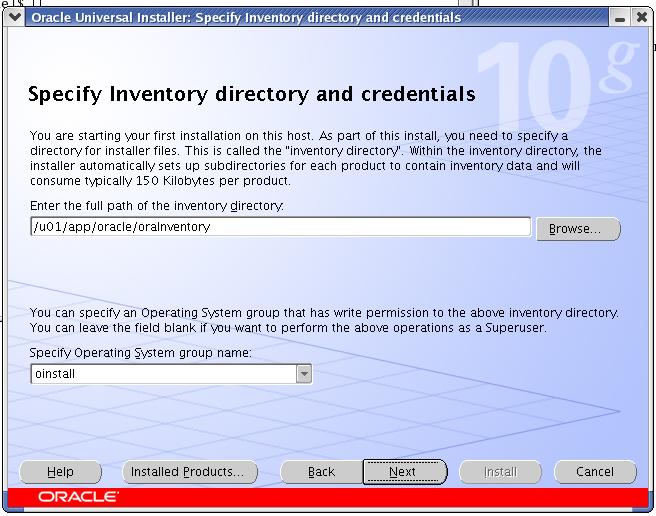

Determining the Oracle Inventory Location:

If you have already installed Oracle software on your system, then OUI detects the existing Oracle Inventory directory from the /etc/oraInst.loc file and uses that location.

If you are installing Oracle software for the first time on your system, and your system does not have an Oracle Inventory, then OUI asks you for a path for the Oracle Inventory and for the name of the Oracle Inventory group (typically oinstall).
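To see in advance which of the two cases applies, you can read the inventory_loc entry from /etc/oraInst.loc yourself. The helper below is a hypothetical sketch; it relies only on the file's simple key=value format (inventory_loc and inst_group lines).

```shell
# Hypothetical helper: print inventory_loc from an oraInst.loc file
# (default /etc/oraInst.loc). Returns 1 if the file or the entry is missing,
# in which case OUI will prompt for an inventory path and group.
inventory_location() {
    local loc_file
    loc_file=${1:-/etc/oraInst.loc}
    [ -r "$loc_file" ] || return 1
    # Split on '=' and print the value of the inventory_loc key.
    awk -F= '$1 == "inventory_loc" { print $2; found = 1 } END { exit !found }' "$loc_file"
}
```

Running "inventory_location" with no arguments on a node that has never had Oracle software installed should simply return a non-zero status.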

Setting Up Oracle Environment:

Add the lines below to the .bash_profile file in the oracle user's home directory (usually /home/oracle) so that ORACLE_BASE and ORACLE_SID are set in every session.

export ORACLE_BASE=/u01/app/oracle
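A fuller .bash_profile fragment might look like the sketch below. Only ORACLE_BASE comes from this guide; ORA_CRS_HOME is a common naming convention (not required by OUI) and its value mirrors the CRS home that root.sh runs from later in this guide. ORACLE_SID=orcl1 is a hypothetical per-node instance name.

```shell
# ~oracle/.bash_profile additions (sketch; adjust paths and SID to your setup)
export ORACLE_BASE=/u01/app/oracle
# CRS home as used by root.sh in this guide; ORA_CRS_HOME is a convention name.
export ORA_CRS_HOME=$ORACLE_BASE/oracle/product/10.2.0/crs
# Hypothetical instance name for this node (e.g. orcl2 on the second node).
export ORACLE_SID=orcl1
# Put the Clusterware tools (crsctl, srvctl, ...) on the PATH.
export PATH=$ORA_CRS_HOME/bin:$PATH
```

Log out and back in (or run ". ~/.bash_profile") so the variables are present before you start runInstaller.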

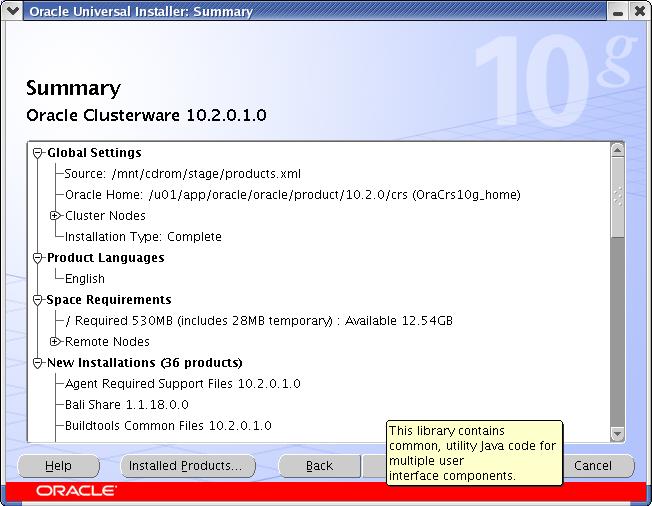

Running OUI (Oracle Universal Installer) to Install Oracle Clusterware:

Complete the following steps to install Oracle Clusterware on your cluster. Run runInstaller from ONLY ONE node (any single node in the cluster).

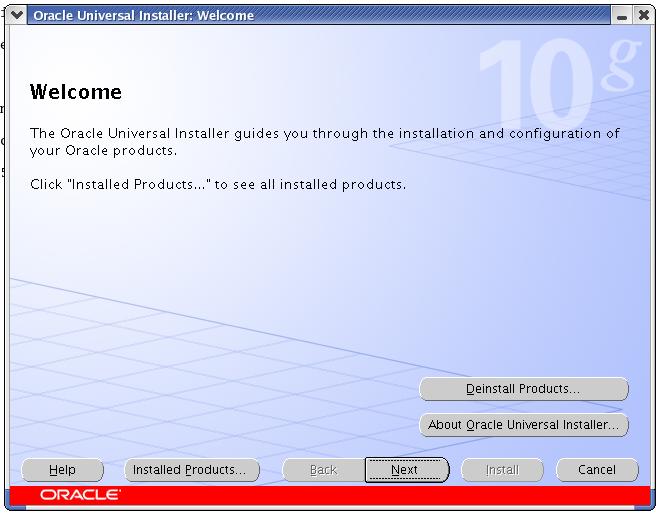

Start runInstaller as the oracle user from any one node. When OUI displays the Welcome page, click Next.

Xlib: connection to ":0.0" refused by server

Xlib: No protocol specified

Can't connect to X11 window server using ':0.0' as the value of the DISPLAY variable.

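If you get the above error, execute the command below as root, and then start runInstaller after switching to the oracle user. Note that "xhost +" disables X access control for all hosts, as its output confirms; run "xhost -" as root once the installation is finished to turn access control back on.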

[root@node1-pub root]# xhost +

access control disabled, clients can connect from any host

[root@node1-pub root]# su - oracle

[oracle@node1-pub oracle]$ /mnt/cdrom/runInstaller

CLICK Next

CLICK Next

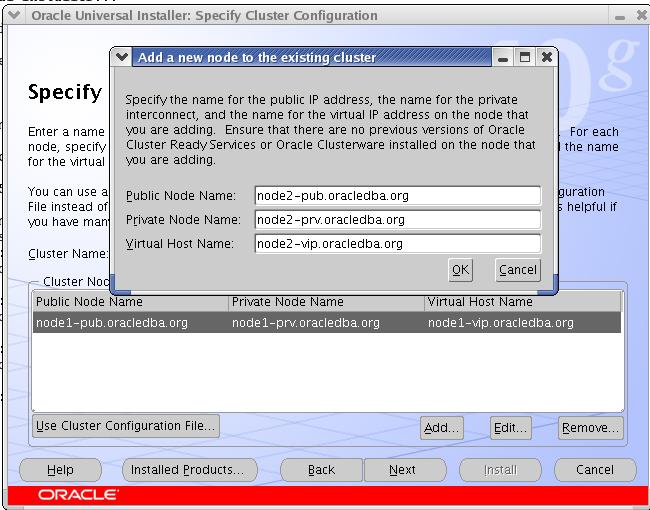

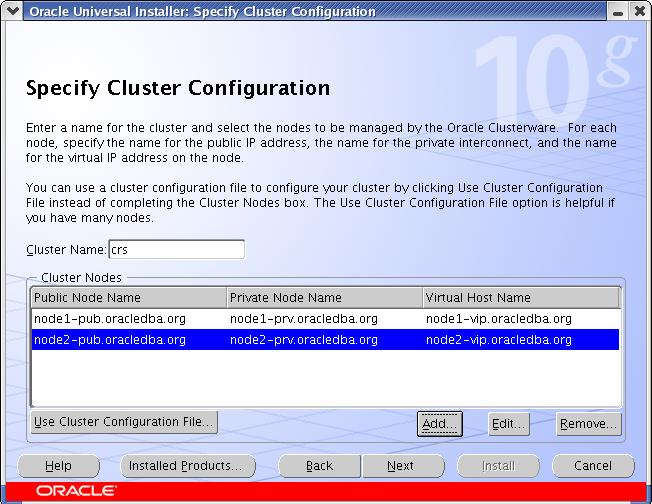

CLICK Next

At this step you should not receive any errors if you have completed the pre-installation steps correctly. As you can see, I got one warning complaining that the system has less memory than required: I had only 512 MB of RAM, while the requirement is 1 GB. I chose not to worry about this warning and checked the status box.
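You can run the same memory check from a shell on each node before launching OUI. This is a hypothetical helper, not part of OUI; it reads MemTotal from /proc/meminfo and compares it with 1048576 kB (1 GB), the requirement quoted above.

```shell
# Hypothetical helper: compare MemTotal with a minimum in kB.
# Defaults: 1048576 kB (1 GB) and /proc/meminfo; a second argument lets you
# point it at another file in the same format.
check_memory() {
    local min_kb meminfo total_kb
    min_kb=${1:-1048576}
    meminfo=${2:-/proc/meminfo}
    total_kb=$(awk '$1 == "MemTotal:" { print $2 }' "$meminfo")
    if [ -z "$total_kb" ]; then
        echo "MemTotal not found in $meminfo" >&2
        return 2
    fi
    if [ "$total_kb" -ge "$min_kb" ]; then
        echo "OK: ${total_kb} kB >= ${min_kb} kB"
    else
        echo "WARNING: ${total_kb} kB < ${min_kb} kB"
        return 1
    fi
}
```

On a 512 MB machine like the one used here, "check_memory" prints a WARNING line and returns 1, matching the warning OUI shows.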

CLICK Next

CLICK Next

Check that each interface has the correct subnet mask and interface type associated with it. If you configured the network on all the nodes correctly, as explained in the pre-installation task, you should not get any error message at this step.
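In the usual RAC layout, the public interfaces of all nodes share one subnet and the private interconnect interfaces share another. The hypothetical helpers below sketch that check with plain shell arithmetic; the addresses in the usage line are placeholders, not values from this guide.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    local old_ifs
    old_ifs=$IFS
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Hypothetical helper: succeed if two addresses fall in the same subnet
# under the given netmask (address AND mask must be equal).
same_subnet() {
    local mask
    mask=$(ip_to_int "$3")
    [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$2") & $mask )) ]
}
```

For example, "same_subnet 192.168.1.11 192.168.1.12 255.255.255.0" succeeds, so two public addresses like these would be on the same subnet.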

CLICK Next

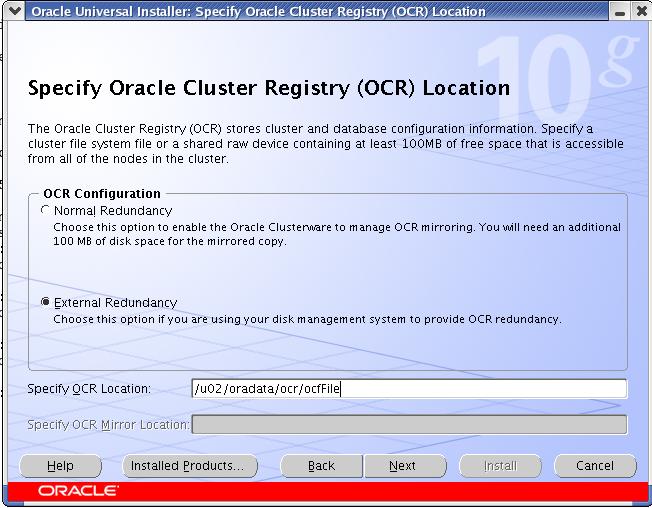

Enter the file name and location (mount point) for the OCR file. In the pre-installation steps, I configured OCFS to store this file, and I used that mount point (/u02/oradata/ocr) here. I chose External redundancy for experimental purposes only. On a production server, make sure that you have an extra mount point created on a separate physical device to store the OCR mirror, so that the OCR is not a single point of failure (SPOF).
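Before entering the OCR location, it can help to confirm that /u02/oradata/ocr really is the OCFS mount on every node. The helper below is a hypothetical sketch that reads /proc/mounts; it assumes OCFS release 1 volumes report the filesystem type "ocfs" (OCFS2 volumes report "ocfs2").

```shell
# Hypothetical helper: print the filesystem type mounted at a directory,
# read from /proc/mounts (or another file in the same format).
# Returns 1 if nothing is mounted there.
mount_fstype() {
    # Field 2 is the mount point, field 3 the type; keep the last match
    # because a later mount on the same directory hides earlier ones.
    awk -v dir="$1" '$2 == dir { t = $3 } END { if (t == "") exit 1; print t }' "${2:-/proc/mounts}"
}
```

On each node, "mount_fstype /u02/oradata/ocr" should print the OCFS type; no output and a non-zero status means the volume is not mounted there.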

CLICK Next

Use the same mount point as the OCR file, and enter the file name you want for the voting disk. If you choose External redundancy, you need to specify only one location.

CLICK Next

CLICK Next

At this step, you may get an error message complaining about a timestamp mismatch among the nodes. Make sure that the clocks on all the nodes are as close as possible (try to get them to match at least to the hour and minute, hh24:mi).
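One rough way to measure the mismatch is to collect "date +%s" (seconds since the epoch) from every node and look at the spread. The helper below is a hypothetical sketch; the usage line assumes ssh user equivalence for the oracle user between the nodes, and each ssh call adds a little latency, so treat the result as approximate.

```shell
# Hypothetical helper: print the spread (max - min) of the epoch-second
# timestamps given as arguments.
clock_skew() {
    printf '%s\n' "$@" | sort -n | awk 'NR == 1 { min = $1 } { max = $1 } END { print max - min }'
}
```

For example: clock_skew "$(ssh node1-pub date +%s)" "$(ssh node2-pub date +%s)". A result of more than a few seconds suggests syncing the clocks (for example with NTP) before continuing.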

When you execute the above scripts on all the nodes, you should get output similar to the following.

NOTE: The node from which you install the Clusterware software is the node that is registered as node 1 in the cluster registry. Here, I installed the Clusterware from the machine called "node2-pub", so it became node 1 to the Clusterware. This does not change the behavior of RAC.

[root@node1-pub root]# /u01/app/oracle/oracle/product/10.2.0/crs/root.sh

WARNING: directory '/u01/app/oracle/oracle/product/10.2.0' is not owned by root

WARNING: directory '/u01/app/oracle/oracle/product' is not owned by root

WARNING: directory '/u01/app/oracle/oracle' is not owned by root

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/u01/app/oracle/oracle/product/10.2.0' is not owned by root

WARNING: directory '/u01/app/oracle/oracle/product' is not owned by root

WARNING: directory '/u01/app/oracle/oracle' is not owned by root

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

assigning default hostname node1-pub for node 1.

assigning default hostname node2-pub for node 2.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: node1-pub node1-prv node1-pub

node 2: node2-pub node2-prv node2-pub

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster configuration.

Oracle Cluster Registry for cluster has already been initialized

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

node1-pub

node2-pub

CSS is active on all nodes.

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

Creating VIP application resource on (2) nodes...

Creating GSD application resource on (2) nodes...

Creating ONS application resource on (2) nodes...

Starting VIP application resource on (2) nodes...

Starting GSD application resource on (2) nodes...

Starting ONS application resource on (2) nodes...

Done.

[root@node1-pub root]#

CLICK OK Button

CLICK Exit

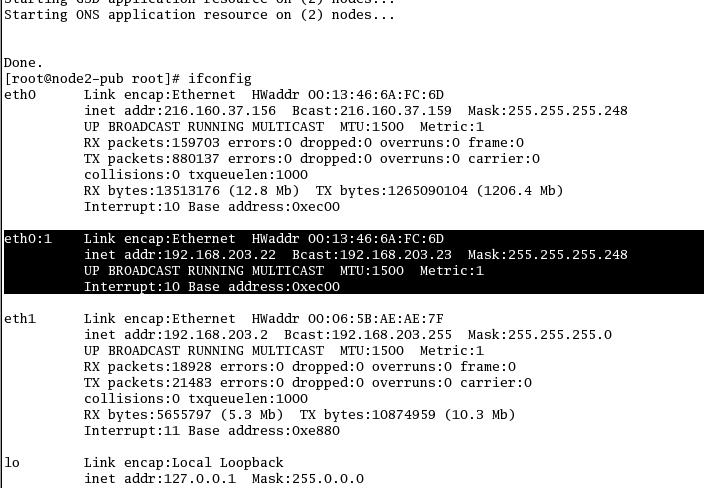

Verifying Virtual IP Network Config:

Now, verify that the virtual IP is configured on eth0 by executing the command below.
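As a hedged sketch of this check: on Linux the VIP typically shows up in "/sbin/ifconfig -a" output as an alias interface such as eth0:1. The hypothetical helper below filters that output; separately, "srvctl status nodeapps -n node1-pub" reports whether the VIP, GSD and ONS resources are running on a node.

```shell
# Hypothetical helper: read `ifconfig -a` output on stdin and print the
# alias interfaces of eth0 (eth0:1, eth0:2, ...), where the VIP is expected.
eth0_aliases() {
    # Interface names start in column 1; newer ifconfig versions append ':'.
    awk '/^eth0:[0-9]+/ { name = $1; sub(/:$/, "", name); print name }'
}
```

Usage: "/sbin/ifconfig -a | eth0_aliases" on each node; an empty result means no VIP alias is plumbed on eth0.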

Verifying Oracle Clusterware Background Processes:

The following processes must be running in your environment after the Oracle Clusterware installation for Oracle Clusterware to function:

- evmd: Event manager daemon that starts the racgevt process to manage callouts.
- ocssd: Manages cluster node membership and runs as the oracle user; failure of this process results in a node restart.
- crsd: Performs high availability recovery and management operations such as maintaining the OCR. It also manages application resources, runs as the root user, and restarts automatically upon failure.
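A quick way to confirm the three daemons listed above is to scan the process list. The helper below is a hypothetical sketch; it assumes the 10g daemons appear in "ps -ef" as evmd.bin, ocssd.bin and crsd.bin (run from the CRS home's bin directory). Oracle's own "crsctl check crs" gives a similar health summary.

```shell
# Hypothetical helper: read `ps -ef` output on stdin and print each
# Clusterware daemon (evmd, ocssd, crsd) that does not appear in it.
# Returns 1 if at least one daemon is missing.
missing_crs_daemons() {
    local ps_out status daemon
    ps_out=$(cat)
    status=0
    for daemon in evmd ocssd crsd; do
        # Fixed-string match on the assumed binary name, e.g. "crsd.bin".
        if ! printf '%s\n' "$ps_out" | grep -qF "$daemon.bin"; then
            echo "$daemon"
            status=1
        fi
    done
    return $status
}
```

Usage: "ps -ef | missing_crs_daemons" prints nothing and returns 0 when all three daemons are running on the node.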