Step By Step: Install Oracle 10g R2 Clusterware on Oracle Enterprise Linux 5.5 (32 bit) Platform.

By Bhavin Hingu

Get ready with the RAC architecture diagram and the RAC setup information: cluster name, node names, VIPs, and inventory location.

3-Node RAC Architecture:

| Machine | Public Name | Private Name | VIP Name |
|---|---|---|---|
| RAC Node1 | node1.hingu.net | node1-prv | node1-vip.hingu.net |
| RAC Node2 | node2.hingu.net | node2-prv | node2-vip.hingu.net |
| RAC Node3 | node3.hingu.net | node3-prv | node3-vip.hingu.net |

Cluster Name: lab

Public Network: 192.168.2.0/eth2

Private Network: 192.168.0.0/eth0
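Before starting the installer, every public, private and VIP name in the table above should resolve on each node (through /etc/hosts or DNS). The following is a minimal bash sketch, assuming `getent` is available (it is on glibc-based systems such as Enterprise Linux 5); `check_name_resolution` and `CLUSTER_NAMES` are hypothetical names, not part of any Oracle tool. It only checks name resolution; the VIP addresses themselves are brought up later by vipca.

```bash
# Sketch: report any cluster host name that does not resolve on this node.
# Source this file, then run check_name_resolution on every node.
if [ -z "${CLUSTER_NAMES:-}" ]; then
  for i in 1 2 3; do
    CLUSTER_NAMES="${CLUSTER_NAMES:-} node$i.hingu.net node$i-prv node$i-vip.hingu.net"
  done
fi

check_name_resolution() {
  local name addr missing=0
  for name in $CLUSTER_NAMES; do
    # getent consults /etc/hosts and DNS in nsswitch.conf order.
    addr=$(getent hosts "$name" | awk '{print $1; exit}')
    if [ -n "$addr" ]; then
      echo "$name -> $addr"
    else
      echo "$name -> NOT RESOLVED" >&2
      missing=1
    fi
  done
  return $missing
}
```

A non-zero return means at least one name still needs an /etc/hosts or DNS entry on that node.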

Oracle Clusterware Software (10.2.0.1):

Server: All the RAC Nodes

ORACLE_BASE: /u01/app/oracle

ORACLE_HOME: /u01/app/oracle/crs

Owner: oracle (Primary Group: oinstall, Secondary Group: dba)

Permissions: 775

OCR/Voting Disk Storage Type: Raw Devices

Oracle Inventory Location: /u01/app/oraInventory

Oracle Database Software (RAC 10.2.0.1) for ASM_HOME:

Server: All the RAC Nodes

ORACLE_BASE: /u01/app/oracle

ORACLE_HOME: /u01/app/oracle/asm

Owner: oracle (Primary Group: oinstall, Secondary Group: dba)

Permissions: 775

Oracle Inventory Location: /u01/app/oraInventory

Listener: LISTENER (TCP:1521)

Oracle Database Software (RAC 10.2.0.1) for DB_HOME:

Server: All the RAC Nodes

ORACLE_BASE: /u01/app/oracle

ORACLE_HOME: /u01/app/oracle/db

Owner: oracle (Primary Group: oinstall, Secondary Group: dba)

Permissions: 775

Oracle Inventory Location: /u01/app/oraInventory

Database Name: labdb

Listener: LAB_LISTENER (TCP:1530)
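The directory layout above can be prepared on each node before the installation. A minimal sketch, meant to be run as root and assuming the paths, owner and 775 permissions listed above; `create_oracle_dirs` and `APP_ROOT` are hypothetical names, and `APP_ROOT` exists only so the sketch can be tried in a scratch directory.

```bash
# Sketch: create the empty CRS, ASM and DB homes plus the inventory directory.
# APP_ROOT is a hypothetical override; it defaults to the /u01/app layout above.
APP_ROOT=${APP_ROOT:-/u01/app}

create_oracle_dirs() {
  local d
  for d in "$APP_ROOT/oracle/crs" "$APP_ROOT/oracle/asm" \
           "$APP_ROOT/oracle/db" "$APP_ROOT/oraInventory"; do
    mkdir -p "$d" || return 1
  done
  # Change ownership only when running as root and the oracle user exists.
  if [ "$(id -u)" -eq 0 ] && id oracle >/dev/null 2>&1; then
    chown -R oracle:oinstall "$APP_ROOT/oracle" "$APP_ROOT/oraInventory" || return 1
  fi
  # 775 as listed above; intended for freshly created, still-empty directories.
  chmod -R 775 "$APP_ROOT/oracle" "$APP_ROOT/oraInventory"
}
```

Usage, as root on every node: source the file and run `create_oracle_dirs`.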

Install 10gR2 Clusterware software:

Start the runInstaller from the Clusterware software location:

[oracle@node1 ~]$ /home/oracle/10gr2/clusterware/runInstaller -ignoreSysPrereqs

Clusterware Installation:

Welcome Screen: Next

Specify Inventory Directory and Credentials:

Full Path: /u01/app/oraInventory

Operating System group name: oinstall

Specify Home Details:

Name: CRS_HOME

Path: /u01/app/oracle/crs

Product-Specific Prerequisite Checks:

Manually verified the Operating System package requirements.

Specify Cluster Configuration:

Cluster Name: lab

Entered the hostnames and VIP names of the cluster nodes.

Specify Network Interface Usage:

Modified the interface type for “eth2” to “Public”

Modified the interface type for “eth1” to “Do Not Use”

Left the interface type for “eth0” as “Private”

Specify OCR Location:

Choose Normal Redundancy

OCR Location: /dev/raw/raw1

OCR Mirror Location: /dev/raw/raw2

Specify the Voting Disk Location:

Choose Normal Redundancy

Voting Disk Location: /dev/raw/raw3

Additional Voting Disk 1 Location: /dev/raw/raw4

Additional Voting Disk 2 Location: /dev/raw/raw5
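Before moving past these screens, it can help to confirm from a terminal that the five raw device paths exist on every node. A sketch, assuming the raw bindings were already set up at the OS level (how they are bound is not covered here); `check_raw_devices` and `OCR_VOTE_DEVICES` are hypothetical names. It only checks that each path is a character device and prints its owner and mode; it does not validate the ownership Oracle expects.

```bash
# Sketch: confirm each OCR/voting path is a character (raw) device.
# Source this file, then run check_raw_devices on every node.
OCR_VOTE_DEVICES=${OCR_VOTE_DEVICES:-"/dev/raw/raw1 /dev/raw/raw2 /dev/raw/raw3 /dev/raw/raw4 /dev/raw/raw5"}

check_raw_devices() {
  local dev bad=0
  for dev in $OCR_VOTE_DEVICES; do
    if [ -c "$dev" ]; then
      # -L follows symlinks so the listing shows the device itself.
      ls -lL "$dev"
    else
      echo "$dev: missing or not a character device" >&2
      bad=1
    fi
  done
  return $bad
}
```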

Summary Screen:

Verified the information here and pressed “Finish” to start the installation.

At the end of the installation, the following two scripts need to be executed as the root user:

/u01/app/oraInventory/orainstRoot.sh

/u01/app/oracle/crs/root.sh

There is a known issue where root.sh fails with the error below, so run root.sh only after applying the workaround that follows:

    Oracle CRS stack installed and running under init(1M)
    Running vipca(silent) for configuring nodeapps
    /home/oracle/crs/oracle/product/10/crs/jdk/jre//bin/java: error while loading shared libraries: libpthread.so.0: cannot open shared object file: No such file or directory

Running srvctl throws a similar error message. This is caused by the LD_ASSUME_KERNEL variable that is hard-coded in the srvctl and vipca scripts; this setting is no longer needed on Enterprise Linux 5. As a workaround, add the line below to both scripts on all the nodes, right after the “export LD_ASSUME_KERNEL” line. The srvctl scripts in ASM_HOME and DB_HOME must be modified the same way for them to work correctly.

    unset LD_ASSUME_KERNEL

/u01/app/oracle/crs/bin/srvctl:

    …
    #Remove this workaround when the bug 3937317 is fixed
    LD_ASSUME_KERNEL=2.4.19
    export LD_ASSUME_KERNEL
    unset LD_ASSUME_KERNEL

    # Run ops control utility
    $JRE $JRE_OPTIONS -classpath $CLASSPATH $TRACE oracle.ops.opsctl.OPSCTLDriver "$@"
    exit $?

/u01/app/oracle/crs/bin/vipca:

    …
    arch=`uname -m`
    if [ "$arch" = "i686" -o "$arch" = "ia64" ]
    then
         LD_ASSUME_KERNEL=2.4.19
         export LD_ASSUME_KERNEL
         unset LD_ASSUME_KERNEL
    fi
    #End workaround
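Editing these scripts by hand on every node is easy to get wrong. The sketch below applies the same edit with GNU sed (assumed, as shipped with Enterprise Linux 5): it inserts `unset LD_ASSUME_KERNEL`, with matching indentation, after each `export LD_ASSUME_KERNEL` line, keeps a `.orig` copy, and skips files that already contain the unset. `patch_ld_assume_kernel` is a hypothetical helper name.

```bash
# Sketch: insert "unset LD_ASSUME_KERNEL" after every "export LD_ASSUME_KERNEL"
# line, reusing that line's indentation. Requires GNU sed (for "\n" in s///).
# Source this file, then call: patch_ld_assume_kernel FILE...
patch_ld_assume_kernel() {
  local f
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "skip: $f not found" >&2
      continue
    fi
    # Already patched: leave the file alone so re-running is harmless.
    if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$f"; then
      echo "already patched: $f"
      continue
    fi
    cp -p "$f" "$f.orig" || return 1   # keep an untouched copy
    sed -i 's/^\([[:space:]]*\)export LD_ASSUME_KERNEL[[:space:]]*$/&\n\1unset LD_ASSUME_KERNEL/' "$f" || return 1
    echo "patched: $f"
  done
}
```

For example, on each node, as a user that can write to these files: `patch_ld_assume_kernel /u01/app/oracle/crs/bin/srvctl /u01/app/oracle/crs/bin/vipca` before running root.sh, and later `patch_ld_assume_kernel /u01/app/oracle/asm/bin/srvctl /u01/app/oracle/db/bin/srvctl` once those homes are installed.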

Now, run root.sh on the nodes one at a time. On the last node, root.sh fails while it is trying to configure the VIPs. This is expected here, because the VIPs use non-routable addresses (192.168.X.X). Once it failed, exited the runInstaller and ran “vipca” manually (as root) to configure the VIPs.

At the end, verify that all the CRS services are up and running as expected:

ssh node1 /u01/app/oracle/crs/bin/crsctl check crs

ssh node2 /u01/app/oracle/crs/bin/crsctl check crs

ssh node3 /u01/app/oracle/crs/bin/crsctl check crs

/u01/app/oracle/crs/bin/crs_stat -t

/u01/app/oracle/crs/bin/crsctl query crs activeversion

/u01/app/oracle/crs/bin/crsctl query css votedisk

/u01/app/oracle/crs/bin/ocrcheck
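The three `ssh ... crsctl check crs` calls above can be wrapped in a loop that flags an unhealthy node. A sketch, assuming passwordless ssh between the nodes for the oracle user (already required by the installer) and the 10.2 output format in which CSS, CRS and EVM each print a line containing "appears healthy"; `check_crs_all_nodes`, `NODES` and `REMOTE_CMD` are hypothetical names.

```bash
# Sketch: run "crsctl check crs" on every node and flag any node where
# CSS, CRS and EVM do not all report "appears healthy".
# Source this file, then run check_crs_all_nodes as the oracle user.
CRS_HOME=${CRS_HOME:-/u01/app/oracle/crs}
NODES=${NODES:-"node1 node2 node3"}
REMOTE_CMD=${REMOTE_CMD:-ssh}   # hypothetical hook: swap in another runner for a dry run

check_crs_all_nodes() {
  local node out healthy rc=0
  for node in $NODES; do
    # Capture the output even if crsctl exits non-zero.
    out=$($REMOTE_CMD "$node" "$CRS_HOME/bin/crsctl" check crs 2>&1) || true
    # grep -c prints 0 (and exits 1) when nothing matches; keep the 0.
    healthy=$(printf '%s\n' "$out" | grep -c 'appears healthy' || true)
    if [ "$healthy" -eq 3 ]; then
      echo "$node: OK"
    else
      echo "$node: PROBLEM"
      printf '%s\n' "$out" | sed 's/^/    /'
      rc=1
    fi
  done
  return $rc
}
```

The loop does not replace `crs_stat -t`, `ocrcheck` or the voting disk query; it only automates the per-node health check.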

Here are the screenshots of the Clusterware installation:

With 10g R2 Clusterware (10.2.0.1) successfully installed, the next step is to install the 10g R2 RAC (10.2.0.1) software for ASM_HOME.

Install 10gR2 RAC software for ASM_HOME

Start the runInstaller from the 10gR2 RAC software location:

[oracle@node1 ~]$ /home/oracle/10gr2/database/runInstaller -ignoreSysPrereqs

RAC Installation for ASM_HOME:

Welcome Screen: Next

Select Installation Type: Enterprise Edition

Specify Home Details:

Name: ASM_HOME

Path: /u01/app/oracle/asm

Specify Hardware Cluster Installation Mode:

Cluster Installation. All the nodes were selected.

Product-Specific Prerequisite Checks:

Four requirement checks fail here; these failures can be ignored. They appear because the 10gR2 installer does not recognize OEL 5 as a supported OS.

Select Configuration Option: Configure ASM.

Configure ASM: Specify the disk group characteristics and select the disks.

Summary Screen:

Verified the information here and pressed “Finish” to start the installation.

At the end of the installation, the script below needs to be executed as the root user on all the RAC nodes:

/u01/app/oracle/asm/root.sh

Verify that the ASM instance is up and running on all the nodes. Then modify the listener.ora file on all the RAC nodes and add the ASM instance information to the SID_LIST section, as shown below. This is needed because the ASM instances register with the local listeners with a status of “BLOCKED”, which prevents dbca from connecting to ASM during database creation.

    [oracle@node3 admin]$ cat listener.ora
    # listener.ora.node3 Network Configuration File: /u01/app/oracle/asm/network/admin/listener.ora.node3
    # Generated by Oracle configuration tools.

    SID_LIST_LISTENER_NODE3 =
      (SID_LIST =
        (SID_DESC =
          (SID_NAME = PLSExtProc)
          (ORACLE_HOME = /u01/app/oracle/asm)
          (PROGRAM = extproc)
        )
        (SID_DESC =
          (GLOBAL_DBNAME = +ASM)
          (SID_NAME = +ASM3)
          (ORACLE_HOME = /u01/app/oracle/asm)
        )
      )

    LISTENER_NODE3 =
      (DESCRIPTION_LIST =
        (DESCRIPTION =
          (ADDRESS = (PROTOCOL = TCP)(HOST = node3-vip.hingu.net)(PORT = 1521)(IP = FIRST))
          (ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.2.3)(PORT = 1521)(IP = FIRST))
        )
      )
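On the other nodes the SID_LIST section differs only in the listener suffix and the ASM instance number. A sketch that prints the section for node N, assuming node N runs instance +ASM<N> and listener LISTENER_NODE<N>, following the node3 pattern above; `asm_sid_list` is a hypothetical helper, and its output still has to be pasted over the existing SID_LIST section by hand.

```bash
# Sketch: print the SID_LIST section for RAC node N (assumed naming:
# listener LISTENER_NODE<N>, ASM instance +ASM<N>).
ASM_HOME=${ASM_HOME:-/u01/app/oracle/asm}

asm_sid_list() {
  local n=$1
  cat <<EOF
SID_LIST_LISTENER_NODE${n} =
  (SID_LIST =
    (SID_DESC =
      (SID_NAME = PLSExtProc)
      (ORACLE_HOME = ${ASM_HOME})
      (PROGRAM = extproc)
    )
    (SID_DESC =
      (GLOBAL_DBNAME = +ASM)
      (SID_NAME = +ASM${n})
      (ORACLE_HOME = ${ASM_HOME})
    )
  )
EOF
}
```

For example, on node1: run `asm_sid_list 1`, put the result into /u01/app/oracle/asm/network/admin/listener.ora, and then run `lsnrctl reload LISTENER_NODE1` from ASM_HOME so the listener rereads the file.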

Install 10gR2 RAC software for Database HOME

Start the runInstaller from the 10gR2 RAC software location:

[oracle@node1 ~]$ /home/oracle/10gr2/database/runInstaller -ignoreSysPrereqs

RAC Installation for DB_HOME:

Welcome Screen: Next

Select Installation Type: Enterprise Edition

Specify Home Details:

Name: DB_HOME

Path: /u01/app/oracle/db

Specify Hardware Cluster Installation Mode:

Cluster Installation. All the nodes were selected.

Product-Specific Prerequisite Checks:

Four requirement checks fail here; these failures can be ignored. They appear because the 10gR2 installer does not recognize OEL 5 as a supported OS.

Select Configuration Option: Install Database Software Only.

Summary Screen:

Verified the information here and pressed “Finish” to start the installation.

At the end of the installation, the script below needs to be executed as the root user on all the RAC nodes:

/u01/app/oracle/db/root.sh

Create the Database Listener (LAB_LISTENER)

Invoke netca from DB_HOME (/u01/app/oracle/db) to create the listener LAB_LISTENER on port 1530.

Create 10gR2 RAC Database (labdb)

Invoked dbca from DB_HOME using an X terminal:

[oracle@node1 ~]$ dbca

Verified that labdb was created and registered with CRS successfully.

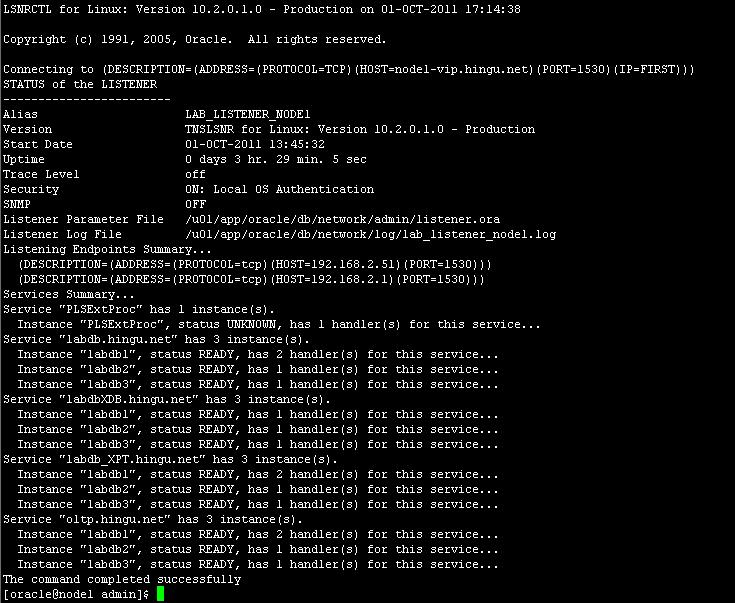

Also, check that labdb is registered successfully with the database listener LAB_LISTENER:

srvctl config database -d labdb

srvctl status database -d labdb

srvctl status service -d labdb

lsnrctl status LAB_LISTENER_NODE1

lsnrctl status LAB_LISTENER_NODE2

lsnrctl status LAB_LISTENER_NODE3
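The three lsnrctl checks above can also be run from one node over ssh. A sketch that looks for a `Service "labdb` line in each `lsnrctl status` output, assuming passwordless ssh and listener names that follow the LAB_LISTENER_NODE<N> pattern above; `check_labdb_listeners`, `NODE_NUMS` and `REMOTE_CMD` are hypothetical names.

```bash
# Sketch: check, over ssh, that each node's LAB_LISTENER_NODE<N> lists labdb.
# Source this file, then run check_labdb_listeners as the oracle user.
DB_HOME=${DB_HOME:-/u01/app/oracle/db}
NODE_NUMS=${NODE_NUMS:-"1 2 3"}
REMOTE_CMD=${REMOTE_CMD:-ssh}   # hypothetical hook for a dry run

check_labdb_listeners() {
  local n out rc=0
  for n in $NODE_NUMS; do
    # ORACLE_HOME is set explicitly because a non-interactive ssh session
    # may not load the oracle user's profile.
    out=$($REMOTE_CMD "node$n" "ORACLE_HOME=$DB_HOME $DB_HOME/bin/lsnrctl status LAB_LISTENER_NODE$n" 2>&1) || true
    # Matches 'Service "labdb"' as well as a domain-qualified 'Service "labdb.<domain>"'.
    if printf '%s\n' "$out" | grep -q 'Service "labdb'; then
      echo "node$n: labdb is registered with LAB_LISTENER_NODE$n"
    else
      echo "node$n: labdb not found on LAB_LISTENER_NODE$n" >&2
      rc=1
    fi
  done
  return $rc
}
```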

Here are the screenshots of the DB creation process:

At the end, shut down the complete CRS stack and take a backup of:

OCR and Voting Disks (using dd)

CRS_HOME

ASM_HOME

DB_HOME

Database

OraInventory
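A sketch of the dd and tar part of this backup, assuming it runs as root after the CRS stack has been stopped on every node (for example with `crsctl stop crs` as root on each node) and that the hypothetical `BACKUP_DIR` has enough space. The raw devices are shared, so the dd step is needed on one node only, while CRS_HOME, ASM_HOME, DB_HOME and OraInventory are local to each node in this setup and need the tar step on every node. The database backup itself (for example with RMAN) is not covered. `backup_cluster_files`, `RAW_DEVICES` and `HOME_DIRS` are hypothetical names.

```bash
# Sketch: back up the OCR/voting raw devices with dd and the Oracle homes
# with tar. Run as root only after "crsctl stop crs" on every node.
BACKUP_DIR=${BACKUP_DIR:-/backup/rac_$(date +%Y%m%d)}   # hypothetical location
# "-" (not ":-") so that an empty RAW_DEVICES skips the dd step on other nodes.
RAW_DEVICES=${RAW_DEVICES-"/dev/raw/raw1 /dev/raw/raw2 /dev/raw/raw3 /dev/raw/raw4 /dev/raw/raw5"}
HOME_DIRS=${HOME_DIRS:-"/u01/app/oracle/crs /u01/app/oracle/asm /u01/app/oracle/db /u01/app/oraInventory"}

backup_cluster_files() {
  local dev dir name
  mkdir -p "$BACKUP_DIR" || return 1

  # Shared raw devices: one dd image per device.
  for dev in $RAW_DEVICES; do
    name=$(basename "$dev")
    if ! dd if="$dev" of="$BACKUP_DIR/$name.dd" bs=1M 2>/dev/null; then
      echo "dd failed for $dev" >&2
      return 1
    fi
    echo "saved $dev -> $BACKUP_DIR/$name.dd"
  done

  # Local homes: one compressed tar per directory. tar records ownership and
  # modes in the archive; extract as root to restore them.
  for dir in $HOME_DIRS; do
    name=$(basename "$dir")
    if ! tar -czf "$BACKUP_DIR/$name.tar.gz" -C "$(dirname "$dir")" "$name"; then
      echo "tar failed for $dir" >&2
      return 1
    fi
    echo "saved $dir -> $BACKUP_DIR/$name.tar.gz"
  done
}
```

For example, on node1 run `backup_cluster_files` as is; on node2 and node3, source the file with `RAW_DEVICES=` (empty) so only the homes are archived.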

Step By Step: Upgrade Clusterware, ASM and Database from 10.2.0.1 to 10.2.0.3.